We get it by now: microservices are a great thing. Little else has been talked about in IT for the past few years, and the idea is surprisingly simple: dividing a large, interdependent system into many small, lightweight systems can make software easier to manage.

Now comes the catch: once you have broken your monolithic application into digestible morsels, how do you put them back together in a meaningful way? Unfortunately, there is no single correct answer to this question. As is so often the case, there are several approaches, and the right one depends on the application and its individual requirements. The core question is how the microservices should communicate with each other. Via a service mesh? Via an API gateway? Or via a message queue?

The overlap between API gateways and service meshes is significant. Both provide service discovery, request routing, authentication, rate limiting, and monitoring. However, they differ in architecture and intended use. The main purpose of an API gateway is to accept traffic from outside your network and distribute it internally. The main purpose of a service mesh is to route and manage traffic within your network.

What is actually the problem?

First, a quick analysis of the problem. To work as a distributed system, microservices must overcome a long list of challenges:

Resilience

There may be dozens or even hundreds of instances of a given microservice, any of which may fail at any given time for any number of reasons.

Load balancing and automatic scaling

With potentially hundreds of endpoints that can fulfill a request, routing and scaling are anything but trivial. In fact, one of the most effective cost-saving measures for large architectures is to increase the accuracy of routing and scaling decisions.

Service discovery

The more complex and distributed an application is, the more difficult it becomes to find existing endpoints and establish a communication channel with them.

Tracking and monitoring

A single transaction in a microservice architecture can traverse multiple services, making it difficult to track its journey.
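One common way to make such a journey traceable is to attach an identifier to the first request and pass it along on every hop. The following is only a minimal sketch of that idea: the header name `X-Trace-Id` and the two service functions are hypothetical and stand in for real network calls.

```python
import uuid

TRACE_HEADER = "X-Trace-Id"  # hypothetical header name, chosen for illustration


def with_trace(headers):
    """Return a copy of the headers that carries a trace ID.

    An existing ID is reused, so every hop logs the same value.
    """
    out = dict(headers)
    if TRACE_HEADER not in out:
        out[TRACE_HEADER] = uuid.uuid4().hex
    return out


def payment_service(headers, log):
    """Hypothetical downstream service: logs the trace ID it received."""
    headers = with_trace(headers)
    log.append(("payment", headers[TRACE_HEADER]))


def checkout_service(headers, log):
    """Hypothetical entry service: creates the trace ID and forwards it."""
    headers = with_trace(headers)
    log.append(("checkout", headers[TRACE_HEADER]))
    payment_service(headers, log)  # stands in for an HTTP call


log = []
checkout_service({}, log)
for service, trace_id in log:
    print(service, trace_id)
```

Both log entries share one ID, so the log lines of different services can be joined into a single transaction afterwards.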

Versioning

As systems mature, it is critical to update available endpoints and APIs while ensuring that older versions remain available.

The solutions

In this article, we present three possible solutions to these problems that are currently among the most common: service meshes, API gateways, and message queues. Of course, there are a number of other approaches that could be used, ranging from simple static load balancing to fixed IP addresses and centralized orchestration servers. However, in this article we will focus on the most popular and in many ways most sophisticated options.

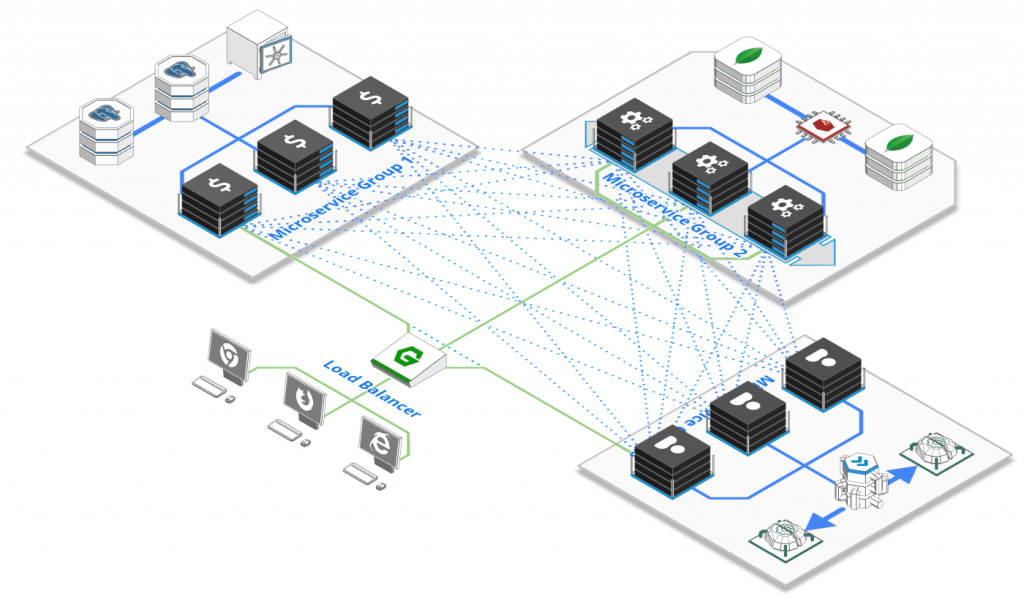

API Gateways

An API gateway is the big brother of the good old reverse proxy for HTTP calls. It is a scalable server, normally connected to the Internet, that can receive requests from both the public Internet and internal services and route them to the most appropriate microservice instance. API gateways provide a number of helpful features, including load balancing and health checks, API versioning and routing, request validation and authorization, data transformation, analytics, logging, SSL termination, and more. Popular open source API gateways include Kong and Tyk. Most cloud providers also offer their own implementation, e.g. AWS API Gateway, Azure API Management, or Google Cloud Endpoints.
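To make the routing part more concrete, here is a minimal sketch of what a gateway does with an incoming request: it checks a credential, matches the path against registered, versioned prefixes, and picks a backend in round-robin order. The class, the API key check, and the backend addresses are hypothetical and are not modeled on any particular product.

```python
from itertools import cycle


class Gateway:
    """Toy gateway: authorization, prefix routing, round-robin balancing."""

    def __init__(self, api_keys):
        self._api_keys = set(api_keys)
        self._routes = {}  # path prefix -> endless round-robin iterator

    def register(self, prefix, backends):
        """Central registration step: map a path prefix to its backends."""
        self._routes[prefix] = cycle(backends)

    def route(self, path, api_key):
        """Return (status, backend) for a request path and API key."""
        if api_key not in self._api_keys:
            return (401, None)  # reject before touching any backend
        # The longest matching prefix wins, so /v2/orders beats /v2.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                return (200, next(self._routes[prefix]))
        return (404, None)


gateway = Gateway(api_keys={"secret"})
gateway.register("/v1/orders", ["orders-v1-a:8080", "orders-v1-b:8080"])
gateway.register("/v2/orders", ["orders-v2-a:8080"])
```

Note that both API versions stay reachable side by side, and that every new route has to go through `register` on the gateway itself.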

Advantages

API gateways offer powerful features, are comparatively low in complexity, and are easy for seasoned web veterans to understand. They provide solid protection against the public Internet and perform many repetitive tasks such as user authentication or data validation.

Disadvantages

API gateways are fairly centralized. They can be deployed in a horizontally scalable manner. However, unlike service meshes, new APIs have to be registered or the configuration changed at a central location. From an organizational perspective, they should therefore also only be managed by one team.

Service meshes

Service meshes are decentralized, self-organizing networks between microservice instances that handle load balancing, endpoint discovery, health checks, monitoring, and tracing. They operate by adding a small agent, called a "sidecar", to each instance. The service mesh mediates traffic, handles the registration of instances, and takes care of metrics collection and maintenance. While most service meshes are conceptually decentralized, they have one or more centralized elements to collect data or provide admin interfaces. Popular service mesh examples include Istio, Linkerd, and HashiCorp's Consul.
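The division of labor between sidecars and the central element can be sketched roughly as follows. This is a simplified illustration under assumed names (`Registry`, `Sidecar`, the addresses), not how Istio, Linkerd, or Consul are implemented: instances register themselves, health reports update the registry, and each sidecar picks a healthy target on behalf of its service while counting calls as a basic metric.

```python
import random


class Registry:
    """Central element: knows which instances exist and which are healthy."""

    def __init__(self):
        self._instances = {}  # service name -> {address: is_healthy}

    def register(self, service, address):
        self._instances.setdefault(service, {})[address] = True

    def report_health(self, service, address, healthy):
        self._instances[service][address] = healthy

    def healthy_instances(self, service):
        found = self._instances.get(service, {})
        return sorted(addr for addr, ok in found.items() if ok)


class Sidecar:
    """Agent next to one instance: discovery, balancing, call metrics."""

    def __init__(self, registry, rng=None):
        self._registry = registry
        self._rng = rng or random.Random()
        self.calls = 0  # simple metric the mesh could collect

    def pick(self, service):
        """Choose a healthy instance of the target service at random."""
        candidates = self._registry.healthy_instances(service)
        if not candidates:
            raise LookupError(f"no healthy instance of {service!r}")
        self.calls += 1
        return self._rng.choice(candidates)


registry = Registry()
registry.register("billing", "10.0.0.1:9000")
registry.register("billing", "10.0.0.2:9000")
registry.report_health("billing", "10.0.0.1:9000", False)

sidecar = Sidecar(registry)
print(sidecar.pick("billing"))  # only the healthy instance is eligible
```

The calling service never needs to know the addresses itself, which is why new instances can join without touching any caller's configuration.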

Advantages

Service meshes are more dynamic and can easily change shape to accommodate new features and endpoints. Their decentralized nature makes it easier to work on microservices in isolated teams.

Disadvantages

Service meshes are based on many moving parts and can therefore become very complex very quickly. For example, fully leveraging Istio requires deploying a separate traffic manager, telemetry collector, and certificate manager, plus a sidecar process for each service instance. They are also a relatively recent development for something that should form the backbone of your IT architecture.

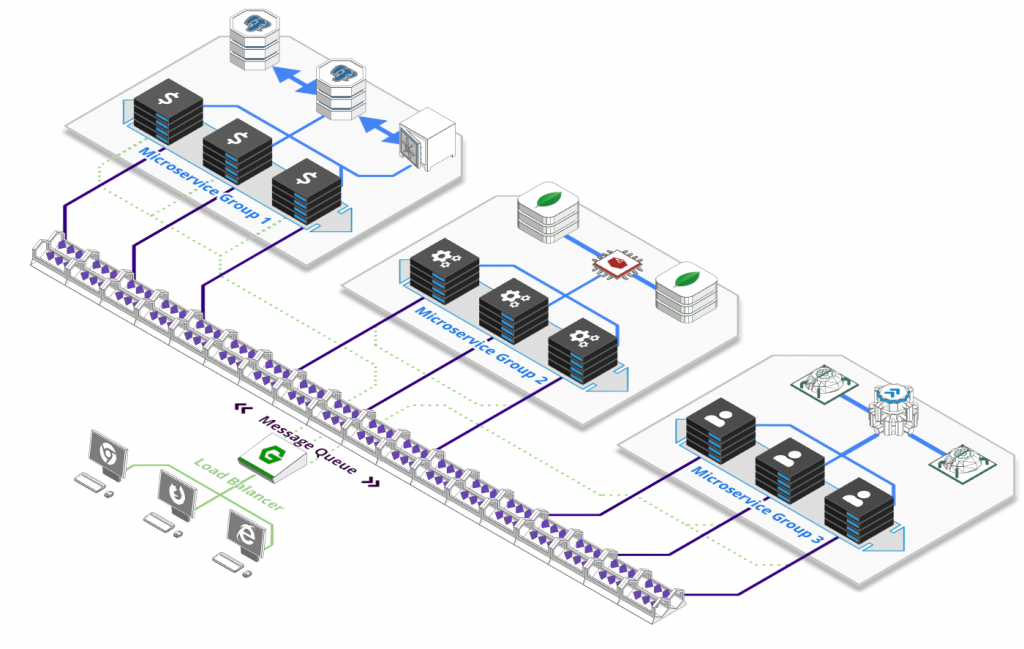

Message queues

At first glance, comparing service meshes to a message queue seems like comparing apples to oranges: They are completely different things, but they solve the same problem, albeit in very different ways.

With a message queue, you can establish complex communication patterns between services by decoupling senders and receivers. You accomplish this using a number of measures, such as topic-based routing or publish-subscribe messaging, as well as buffered processing, which makes it easier for multiple instances to handle different aspects of a task over time.
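As a rough in-memory illustration of topic-based publish-subscribe with buffering, consider the sketch below. The `Broker` class, the topic name, and the subscriber names are hypothetical, and a real broker would of course add persistence, acknowledgements, and networking.

```python
from collections import defaultdict, deque


class Broker:
    """Toy broker: one buffered queue per subscriber and topic."""

    def __init__(self):
        self._queues = defaultdict(dict)  # topic -> {subscriber: deque}

    def subscribe(self, topic, subscriber):
        """Create (or return) the subscriber's buffer for a topic."""
        return self._queues[topic].setdefault(subscriber, deque())

    def publish(self, topic, message):
        """Copy the message into every subscriber's buffer for the topic.

        The sender does not know who receives it; it only learns how
        many buffers the message was delivered to.
        """
        for buffer in self._queues[topic].values():
            buffer.append(message)
        return len(self._queues[topic])


broker = Broker()
thumbnailer = broker.subscribe("video.uploaded", "thumbnailer")
transcoder = broker.subscribe("video.uploaded", "transcoder")

broker.publish("video.uploaded", {"video_id": 1})
broker.publish("video.uploaded", {"video_id": 2})

# Each consumer drains its own buffer whenever it is ready.
print(thumbnailer.popleft())
print(len(transcoder))
```

The sender and the two receivers never reference each other directly, and a slow consumer simply leaves messages in its buffer until it catches up.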

Message queues have been around forever, which has led to a wide range of options. Popular open source choices are Apache Kafka and AMQP brokers such as RabbitMQ or HornetQ. The major cloud providers also offer their own services, such as AWS SQS or Kinesis, Google Cloud Pub/Sub, or Azure Service Bus.

Advantages

Simply decoupling senders and receivers is an effective concept that eliminates the need for a number of other concepts such as health checks, routing, endpoint discovery, or load balancing. Instances can select relevant tasks from a buffered queue as soon as they are ready. This is particularly effective when automatic orchestration and scaling decisions are based on the number of messages in each queue, resulting in resource-efficient systems.
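A scaling decision based on queue length can be as simple as the following sketch. The function name, the target of messages per instance, and the minimum and maximum limits are assumptions for illustration; real autoscalers usually add smoothing and cool-down periods on top.

```python
import math


def desired_instances(queue_length, per_instance,
                      min_instances=1, max_instances=20):
    """Return how many workers to run for the current backlog.

    per_instance is the number of queued messages one worker is
    expected to handle; the result is clamped to the given limits.
    """
    if per_instance <= 0:
        raise ValueError("per_instance must be positive")
    wanted = math.ceil(queue_length / per_instance)
    return max(min_instances, min(max_instances, wanted))


for backlog in (0, 95, 1000):
    print(backlog, desired_instances(backlog, per_instance=10))
```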

Disadvantages

Message queues are not good at request/response communication. Some allow you to map this pattern onto their existing concepts, but it is not really what they are made for. Because of their buffering, they can also add significant latency to a system. They are also quite centralized (although horizontally scalable) and can be quite expensive at large scale.
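One way request/response is commonly emulated on top of a queue is with a correlation ID: the sender tags each request, and the worker stores its reply under the same tag. The sketch below runs in a single process with hypothetical function names, and it also shows why this feels awkward: the caller has to come back and ask for the reply instead of simply receiving it.

```python
import uuid
from collections import deque

requests = deque()  # buffered request queue
replies = {}        # correlation ID -> reply payload


def send_request(payload):
    """Enqueue a request and return the correlation ID to wait on."""
    correlation_id = uuid.uuid4().hex
    requests.append({"id": correlation_id, "payload": payload})
    return correlation_id


def worker_step():
    """Process one queued request and file the reply under its ID."""
    message = requests.popleft()
    replies[message["id"]] = message["payload"].upper()


def fetch_reply(correlation_id):
    """Return the reply if it has arrived, otherwise None."""
    return replies.pop(correlation_id, None)


ticket = send_request("hello")
print(fetch_reply(ticket))  # None: nothing has been processed yet
worker_step()
print(fetch_reply(ticket))  # HELLO
```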

So, when should you choose which solution?

Actually, this is not necessarily an either-or decision. In fact, it may make perfect sense to provide the publicly available API with an API gateway, run a service mesh for inter-service communication, and support things with a message queue for asynchronous task scheduling. A service mesh can work with an API gateway to efficiently accept external traffic and then effectively forward that traffic once it is on the network. The combination of these technologies can be a powerful way to ensure application availability and resiliency while ensuring that your applications can be used with ease.

In a deployment with an API gateway and a service mesh, incoming traffic from outside the cluster is routed first through the API gateway and then into the mesh. The API gateway can handle authentication, edge routing, and other edge functions, while the service mesh provides detailed observation and control of your architecture.

However, if you want to focus only on communication between services, one possible answer could be:

- If you are already running an API gateway for your public-facing API, you can keep complexity low by reusing it for communication between services.

- If you work in a large organization with isolated teams and poor communication, a service mesh gives you maximum independence so you can easily add new services over time.

- If you are designing a system where individual steps are spread out over time, such as a YouTube-like service where uploading, processing, and publishing videos can take a few minutes, use a message or task queue to do this.

What the future holds

Despite all the hype, service meshes are a fairly young concept. Istio is the most popular option, and it is evolving rapidly. In the future, service meshes like Istio can be expected to incorporate much of what you get from an API gateway today. The two concepts are increasingly merging into one, resulting in a more decentralized network of services that provides both external API access and internal communication, possibly even in a time-buffered form.

ScaleUp is a hosting provider for open source multi-cloud solutions based on OpenStack and Kubernetes, hosted 100% in its own data centers in Germany.