Kubernetes is software that orchestrates and monitors containerized microservices at the cluster, service, pod, and container levels. As shown in our introductory article, Kubernetes (abbreviated as "K8s") connects multiple servers to form a cluster and orchestrates resources and workloads across it. In this article, we take a closer look at the structure and components of a Kubernetes cluster.

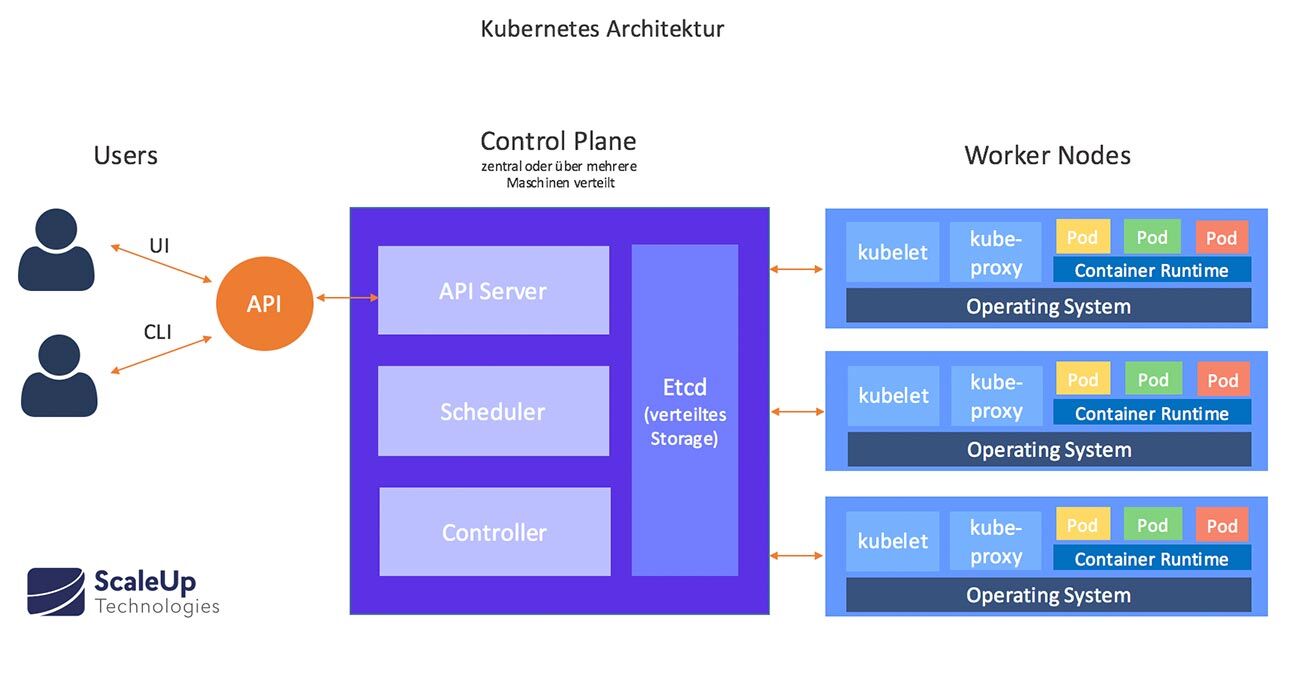

The main components of a K8s cluster are at least one cluster master (the Control Plane) and several worker machines, called nodes. A node can be a physical or a virtual machine. Each node contains all the services needed to run pods.

The brain of the cluster resides in the Control Plane, where all cluster orchestration tasks are managed.

The Control Plane in turn consists of several components, which run on the master nodes:

Master Nodes

Kube API Server

The Kube API Server is the central communication hub of the cluster. As the front end of the Control Plane, it provides REST endpoints through which the other cluster components interact.

All interactions with the cluster are executed via Kubernetes API calls. These calls can be made directly via HTTPS or indirectly via commands in the Kubernetes command line client (kubectl) or via the Kubernetes UI (Dashboard).
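To make the REST structure more concrete, here is a minimal sketch of how the URL paths behind such API calls are composed. The helper `api_path` and the example names (`web`, `shop`) are our own illustration and not part of any Kubernetes client. The sketch relies on the convention that the core API group is served under `/api/<version>` and named groups such as `apps` under `/apis/<group>/<version>`.

```python
from typing import Optional


def api_path(resource: str,
             namespace: Optional[str] = None,
             name: Optional[str] = None,
             group: str = "",
             version: str = "v1") -> str:
    """Build the REST path for a Kubernetes resource (hypothetical helper)."""
    # The core group has an empty name and lives under /api,
    # all named groups live under /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        # Namespaced resources are nested below their namespace.
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name is not None:
        # A trailing name addresses a single object instead of a collection.
        parts.append(name)
    return "/".join(parts)


print(api_path("pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(api_path("deployments", namespace="web", name="shop", group="apps"))
# /apis/apps/v1/namespaces/web/deployments/shop
print(api_path("nodes"))
# /api/v1/nodes
```

A tool such as kubectl ultimately sends HTTPS requests to paths of this shape, so the same objects can be reached with any HTTP client that is authorized against the API server.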

Etcd

Etcd is the database backend of the cluster. It is a key-value store that holds the cluster configuration, i.e. which nodes and resources are available within the cluster. The cluster state is also stored in Etcd.
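Etcd is a key-value store, and the following toy model illustrates the idea of keeping cluster objects under hierarchical keys with a global revision counter. It is only an in-memory sketch: the class `ToyStore` is hypothetical, and the key layout below is an illustrative assumption modeled on the `/registry` prefix that Kubernetes uses by default, not an exact reproduction of what is stored.

```python
from typing import Dict, List, Optional, Tuple


class ToyStore:
    """In-memory stand-in for a key-value store with a global revision."""

    def __init__(self) -> None:
        # key -> (value, revision at which the key was last written)
        self._data: Dict[str, Tuple[str, int]] = {}
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        # Every write increments the global revision.
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        return None if entry is None else entry[0]

    def list_prefix(self, prefix: str) -> List[str]:
        # Range query: all keys that start with the given prefix.
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyStore()
store.put("/registry/nodes/node-a", "Ready")
store.put("/registry/pods/default/web-0", "Running")
store.put("/registry/pods/default/web-1", "Pending")

print(store.list_prefix("/registry/pods/default/"))
# ['/registry/pods/default/web-0', '/registry/pods/default/web-1']
print(store.revision)
# 3
```

Prefix queries of this kind are what make it cheap to ask for "all pods in a namespace", and the revision counter is what allows clients to notice that something has changed since they last looked.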

Kube-Scheduler

The Kube-Scheduler watches for newly created pods that have no node assigned and selects a node for them to run on. Factors taken into account include individual and collective resource requirements, hardware, software, and policy constraints, data locality, interference between workloads, and deadlines.
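The node selection can be pictured as two steps: first filter out nodes that cannot run the pod, then score the remaining ones and pick the best. The sketch below shows this with CPU and memory only. The classes `Node` and `PodRequest`, the function `schedule`, and the scoring rule (most CPU left over wins) are simplifying assumptions of ours; the real scheduler evaluates many more criteria.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Step 1, filtering: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_millis >= pod.cpu_millis
                and n.free_memory_mib >= pod.memory_mib]
    if not feasible:
        return None  # the pod remains unscheduled
    # Step 2, scoring: prefer the node with the most resources left over.
    best = max(feasible,
               key=lambda n: (n.free_cpu_millis - pod.cpu_millis,
                              n.free_memory_mib - pod.memory_mib))
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=512),
]

print(schedule(PodRequest("web", cpu_millis=1000, memory_mib=1024), nodes))
# node-b
print(schedule(PodRequest("big", cpu_millis=8000, memory_mib=1024), nodes))
# None
```

In the first call, node-a lacks CPU and node-c lacks memory, so only node-b survives the filter step.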

Kube Controller Manager

The Kube Controller Manager implements the main control loops. It manages resources and deployments, watches for differences between the current and the desired cluster state, and makes the changes necessary to reach the desired state. Logically, each controller is a separate process. However, to reduce complexity, they are all compiled into a single binary and run in a single process.

Controllers include:

Node Controller: Responsible for detecting and responding when nodes fail.

Replication Controller: Responsible for maintaining the correct number of pods for each replication controller object in the system.

Endpoint Controller: Populates the Endpoints object, which links Services to Pods.

Service Account and Token Controller: Creates default accounts and API access tokens for new namespaces.
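The control-loop idea shared by these controllers can be sketched in a few lines: compare the desired state with the current state and derive the actions that close the gap. The function `reconcile`, the pod naming scheme, and the action strings are hypothetical and only illustrate the pattern for a replica count; real controllers work through the API server and handle many edge cases.

```python
from typing import List


def reconcile(desired_replicas: int,
              running_pods: List[str],
              name_prefix: str = "web") -> List[str]:
    """Return the actions needed to move from the current to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        start = len(running_pods)
        return [f"create {name_prefix}-{start + i}" for i in range(diff)]
    if diff < 0:
        # Too many pods: delete the surplus, taken from the end of the list.
        return [f"delete {pod}" for pod in running_pods[diff:]]
    # Current state already matches the desired state: nothing to do.
    return []


print(reconcile(3, ["web-0"]))
# ['create web-1', 'create web-2']
print(reconcile(1, ["web-0", "web-1", "web-2"]))
# ['delete web-1', 'delete web-2']
print(reconcile(2, ["web-0", "web-1"]))
# []
```

A controller runs this kind of comparison over and over, which is why a cluster drifts back to its declared state after a pod or node fails.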

Cloud Controller Manager

The Cloud Controller Manager connects the cluster to the API of the cloud provider (in cloud-based Kubernetes clusters). It bundles the controllers that depend on the cloud provider: the node controller checks whether a node that has stopped responding has been deleted by the cloud provider, the route controller sets up routing in the cloud infrastructure, the service controller manages resources such as load balancers, and the volume controller manages disk storage.

Worker Nodes

Worker node components run on every node. They keep the pods running and provide the Kubernetes runtime environment.

Kubelet

The Kubelet is an agent that runs on each node in the cluster. It launches the pods using the available container engine (Docker, rkt, etc.) and periodically checks and reports pod status against the given PodSpecs.

Kube proxy

Kube proxy maintains the network rules on the host and performs connection forwarding to service endpoints.
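What "forwarding to service endpoints" means can be shown with a toy model: a stable service address is mapped to a changing set of pod addresses, and each new connection is handed to one of them. The class `ToyServiceProxy`, the IP addresses, and the round-robin strategy are assumptions for illustration. The real Kube proxy typically programs kernel-level rules (for example iptables or IPVS) instead of forwarding traffic in application code.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class ToyServiceProxy:
    """Maps a stable service address to a rotating set of pod endpoints."""

    def __init__(self) -> None:
        self._backends: Dict[str, Iterator[str]] = {}

    def set_endpoints(self, service_addr: str, pod_addrs: List[str]) -> None:
        if not pod_addrs:
            raise ValueError("a service needs at least one endpoint")
        # Replace the rule for this service whenever its endpoints change.
        self._backends[service_addr] = cycle(list(pod_addrs))

    def forward(self, service_addr: str) -> str:
        # Pick the next backend in round-robin order.
        return next(self._backends[service_addr])


proxy = ToyServiceProxy()
proxy.set_endpoints("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])

print(proxy.forward("10.96.0.10:80"))  # 10.244.1.5:8080
print(proxy.forward("10.96.0.10:80"))  # 10.244.2.7:8080
print(proxy.forward("10.96.0.10:80"))  # 10.244.1.5:8080
```

Clients only ever see the service address, so pods behind it can be replaced or rescheduled without the clients noticing.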

Container Runtime

The container runtime is the software that actually runs the containers. Kubernetes supports multiple container engines: Docker, containerd, CRI-O, rktlet, and any implementation of the Kubernetes CRI (Container Runtime Interface).

Containers share the kernel of the host operating system and therefore do not need their own guest operating system, which makes them particularly lightweight and fast to start. Instead, each container carries only the thin layer of operating system functions that it needs.

Addons

Kubernetes components are further extended by addons. Addons are pods and services that implement cluster features. Addons can be managed at different deployment levels. The Kubernetes addons listed here are only a selection, chosen for their relevance:

Core DNS

Core DNS is the cluster-internal DNS server. It automatically creates DNS records for Kubernetes services and pods within their namespaces. This makes it easier for pods to find other services in the cluster.
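As a small illustration of the naming scheme, a service is reachable under a name of the form `<service>.<namespace>.svc.<cluster domain>`. The helper `service_dns_name` and the example service names are our own; `cluster.local` is the commonly used default cluster domain and may differ in a given installation.

```python
def service_dns_name(service: str,
                     namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the fully qualified DNS name of a service (hypothetical helper)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("shop"))
# shop.default.svc.cluster.local
print(service_dns_name("db", namespace="backend"))
# db.backend.svc.cluster.local
```

A pod that wants to talk to the `db` service in the `backend` namespace can therefore use this name instead of an IP address that may change.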

Web UI

Web UI (Dashboard) is a general-purpose, web-based user interface for Kubernetes clusters. The Kubernetes Dashboard provides functions for deploying, monitoring, and troubleshooting applications running in the cluster.

Monitoring tools for container resource management

Container Resource Monitoring records generic time-series measurement data about containers in a central database and provides a user interface for browsing this data at the container, pod, service, and cluster levels. This data is important for reliable operation and automatic scaling of clusters.

Several monitoring solutions are available for application monitoring in Kubernetes. For newly created clusters, monitoring statistics can be collected through two separate pipelines: the "Resource Metrics Pipeline" (a limited set of metrics, provided via Kubelet and cAdvisor) and the "Full Metrics Pipeline" (significantly more metrics, e.g. with Prometheus).

Cluster level logging

Cluster-level logging stores container log files in a central log store and provides an interface for searching and browsing them.

It makes sense to store logs independently of containers and nodes at the cluster level, since this information would be lost if a container or node crashed or a pod was deleted.

Cluster-level logging requires a separate backend to store, analyze, and query logs. Kubernetes does not provide a native storage solution for log data. However, there are many existing logging solutions that can be integrated into the Kubernetes cluster. Possible approaches include:

a node-level logging agent that runs on every node,

a dedicated sidecar container for logging inside an application pod, or

pushing logs directly from within the application to the backend.
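The first approach, a node-level agent, can be sketched as follows. The classes `CentralLogStore` and `NodeLogAgent` are hypothetical in-memory stand-ins and do not correspond to any specific logging product. The point of the sketch is that log lines are enriched with node, pod, and container metadata and copied to a central store, so they survive the deletion of the pod.

```python
from typing import Dict, List


class CentralLogStore:
    """Toy backend that keeps log records and supports a text search."""

    def __init__(self) -> None:
        self.records: List[Dict[str, str]] = []

    def search(self, text: str) -> List[Dict[str, str]]:
        return [r for r in self.records if text in r["message"]]


class NodeLogAgent:
    """Toy agent that ships the log lines of local containers to the backend."""

    def __init__(self, node: str, backend: CentralLogStore) -> None:
        self.node = node
        self.backend = backend

    def ship(self, pod: str, container: str, lines: List[str]) -> None:
        for line in lines:
            # Attach metadata so the line can be found after the pod is gone.
            self.backend.records.append({
                "node": self.node,
                "pod": pod,
                "container": container,
                "message": line,
            })


backend = CentralLogStore()
agent = NodeLogAgent("node-a", backend)

local_logs = {("web-0", "app"): ["GET / 200", "GET /cart 500"]}
for (pod, container), lines in local_logs.items():
    agent.ship(pod, container, lines)

# Simulate the pod being deleted together with its local log files.
local_logs.clear()

hits = backend.search("500")
print(len(hits), hits[0]["pod"])
# 1 web-0
```

The sidecar and direct-push approaches differ in where the shipping code runs, but they feed the same kind of central store.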

Further information on the logging topic can be found here: https://kubernetes.io/docs/concepts/cluster-administration/logging/

Kubernetes Cluster Deployment

A Kubernetes cluster deployment may require multiple master nodes and a separate etcd cluster to ensure high availability. Kubernetes also uses an overlay network to provide networking capabilities similar to those of a virtual machine-based environment. This software-defined network (SDN) enables communication between containers across the cluster and assigns a unique IP address to each pod.
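Why a highly available etcd cluster is usually built with an odd number of members follows from simple arithmetic: etcd needs a majority (quorum) of its members to agree on every write. The two helper functions below are our own illustration of that calculation.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Number of members that may fail while a majority is still available."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(n, quorum(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2
```

Going from three to four members does not increase the number of tolerated failures, which is why clusters of three or five members are common choices.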

Conclusion

In principle, Kubernetes consists of a set of independent control processes that can be combined again and again to form new workflows, much like the bricks in a Lego construction kit. The control processes continuously monitor the current state of the container landscape and move it toward the target state. Orchestration thus becomes largely self-directed, and a central control instance is no longer necessary. Applications can be developed, operated, and scaled faster and more easily thanks to automated processes.

If your interest in this technology is piqued, you are welcome to contact us for a Kubernetes test, visit one of our workshops, or talk to us about your individual requirements.